Event Branches

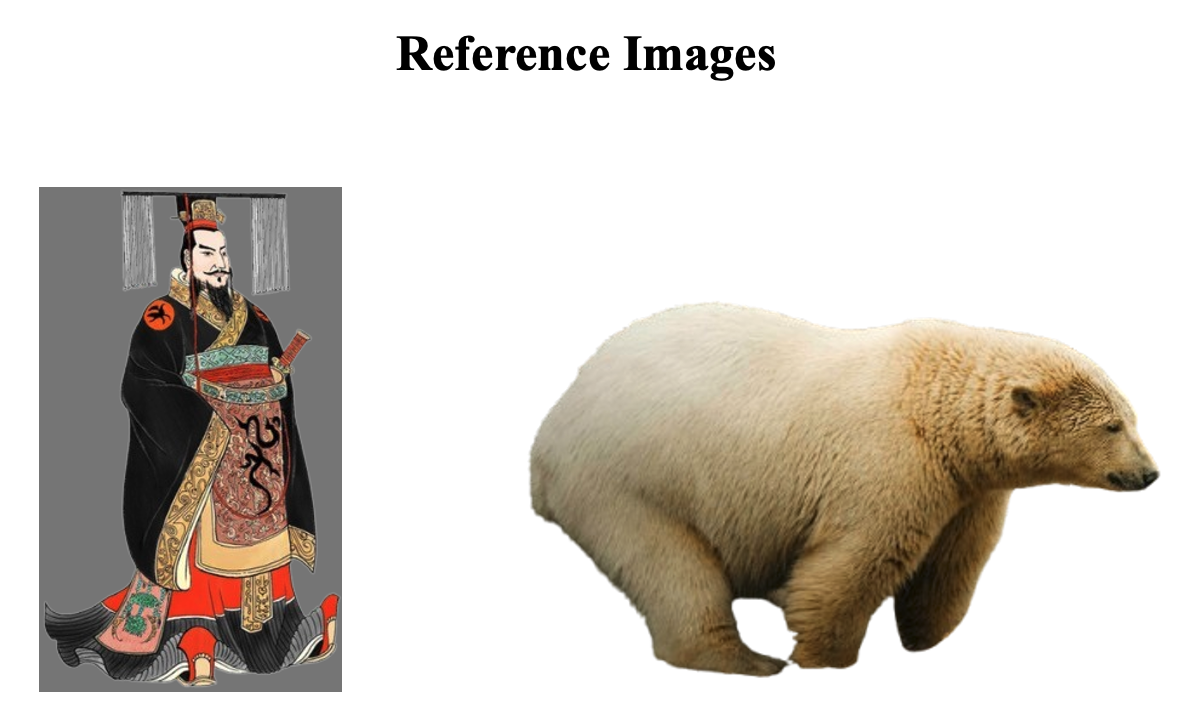

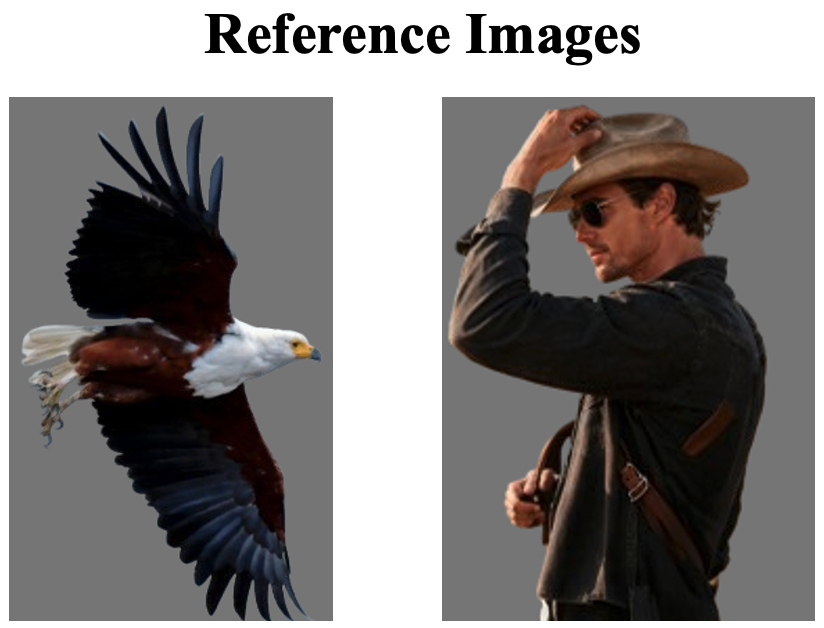

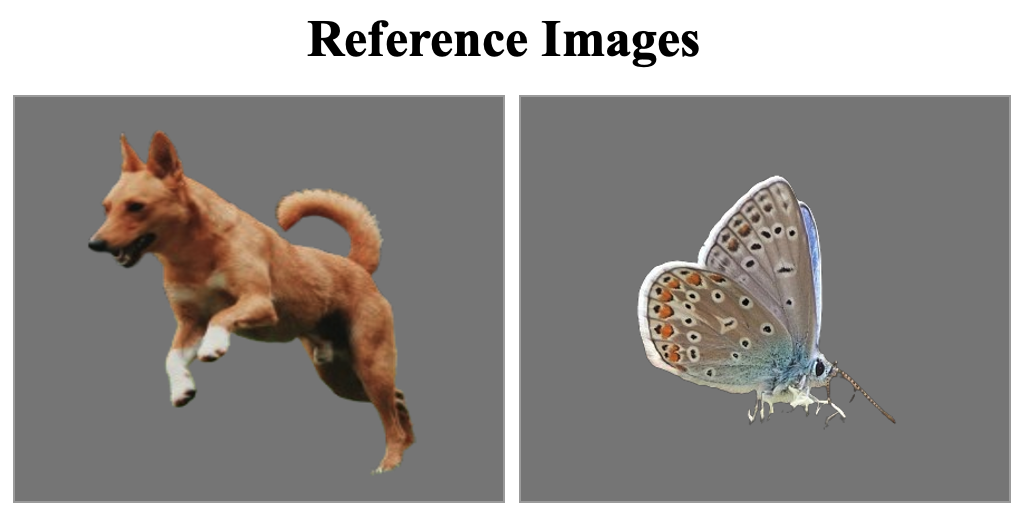

We present WorldCanvas, a framework for promptable world events that enables rich, user-directed simulation by combining text, trajectories, and reference images. Unlike text-only approaches and existing trajectory-controlled image-to-video methods, WorldCanvas pairs trajectories, which encode motion, timing, and visibility, with natural language for semantic intent and reference images for visually grounding object identity. This combination enables the generation of coherent, controllable events, including multi-agent interactions, object entry and exit, reference-guided appearance, and counterintuitive events. The resulting videos show not only temporal coherence but also emergent consistency: object identity and the scene are preserved even when objects temporarily disappear from view. By supporting expressive world event generation, WorldCanvas moves world models from passive predictors toward interactive, user-shaped simulators.
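To make the three prompt modalities more concrete, here is a minimal sketch of how a single event prompt might be represented and how a sparse trajectory could be expanded into per-frame positions with visibility. Everything in it is a hypothetical illustration, not WorldCanvas's actual input format. This includes the names `TrajectoryPoint`, `EventPrompt`, and `densify`, the normalized coordinates, linear interpolation between keyframes, and the rule that each keyframe's visibility flag holds until the next keyframe.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class TrajectoryPoint:
    # Hypothetical keyframe: frame index, normalized (x, y) position, visibility flag.
    t: int
    x: float
    y: float
    visible: bool  # False when the object is hidden or outside the frame


@dataclass
class EventPrompt:
    # Hypothetical container pairing the three modalities for one agent.
    caption: str                           # natural-language semantic intent
    trajectory: List[TrajectoryPoint]      # motion, timing, and visibility
    reference_image: Optional[str] = None  # path to a reference image (assumed)


def densify(trajectory: List[TrajectoryPoint],
            num_frames: int) -> List[Optional[Tuple[float, float]]]:
    """Expand keyframes to one position per frame; None marks frames where
    the object is absent (before entry, after exit, or while invisible).

    Assumption: positions are linearly interpolated between consecutive
    keyframes, and a keyframe's visibility flag applies until the next one.
    """
    frames: List[Optional[Tuple[float, float]]] = [None] * num_frames
    pts = sorted(trajectory, key=lambda p: p.t)
    if not pts:
        return frames
    for a, b in zip(pts, pts[1:]):
        span = b.t - a.t
        # Fill frames in [a.t, b.t); empty when span == 0, so no division by zero.
        for f in range(max(a.t, 0), min(b.t, num_frames)):
            if not a.visible:
                continue  # segment starts hidden: leave the frame as None
            alpha = (f - a.t) / span
            frames[f] = (a.x + alpha * (b.x - a.x), a.y + alpha * (b.y - a.y))
    last = pts[-1]
    if 0 <= last.t < num_frames and last.visible:
        frames[last.t] = (last.x, last.y)
    return frames


if __name__ == "__main__":
    prompt = EventPrompt(
        caption="a ball rolls to the right, hides behind a box, then reappears",
        trajectory=[
            TrajectoryPoint(0, 0.0, 0.5, True),
            TrajectoryPoint(4, 1.0, 0.5, False),
            TrajectoryPoint(6, 1.0, 0.5, True),
        ],
    )
    for i, pos in enumerate(densify(prompt.trajectory, 8)):
        print(i, pos)
```

In this sketch, a `None` entry is how the trajectory would express the object entry, exit, and temporary disappearance described above. A generator conditioned on such a sequence, together with the caption and the reference image, would then be expected to keep the object's identity consistent when it reappears.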

Qualitative comparisons (video panels): ATI vs. Wan2.2 I2V vs. Ours (three examples); ATI vs. Wan2.2 I2V vs. Frame In-N-Out vs. Ours; Frame In-N-Out vs. Ours.

Cross-attention comparison (video panels): Standard full cross-attention vs. Hard cross-attention vs. Ours.